I enjoyed this course a lot, even though I didn't have the chance to give it everything I could have. I learnt a lot of valuable lessons about time management this study period, which I will carry over into the next.

Thanks to Peter and Cynthia!

See you all next study period.

Chris

Thursday, February 26, 2009

Wednesday, February 4, 2009

Concepts Assignment - 4 - Automation

Concept 10 - Automation

“...using various automated processes can make one question the reliability of the results you receive…by surrendering control over information finding to others we are either lulled into false security or constantly nagged by doubts that the process is time-efficient but quality-inefficient.”

Automation is the execution of repetitive processes without human intervention, and computers can do this extremely quickly and efficiently. It is a key part of the Internet, yet not all of its automated processes are beneficial. Spam is possibly the biggest automated online nuisance today, and it can, and usually does, affect anybody with an email address. Our most effective method of counteracting spam is more automation. However, as we will see, automation facilitates spam more than it prevents it.

The fact that spam still exists suggests that some people actually buy what it offers, so spammers try to increase their chance of a sale by sending to as many email addresses as possible. One way spammers find email addresses is with Spambots. A Spambot is an automated computer program that scans the Internet for email addresses in order to build email databases (Microsoft Exchange Definitions - Spambot, 2005). Because the Internet is so large, Spambots can ‘harvest’ a huge number of addresses, and spammers use the resulting databases to send out their unsolicited email in the hope of attracting sales. Our main way of fighting back against spammers is with automated filters. Two common types are IP filters and word filters.
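As a rough illustration of how a Spambot ‘harvests’ addresses, here is a minimal Python sketch that pattern-matches anything shaped like an email address in the text of web pages and merges the results into one database. The regular expression, the sample pages and the function names are assumptions made for illustration; a real Spambot would also have to fetch pages and follow links, which is left out here.

```python
import re

# Simplified, hypothetical address pattern: real addresses allow more
# characters than this, and real harvesters may use looser rules.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def harvest_addresses(page_text: str) -> set[str]:
    """Return every substring of one page that looks like an email address."""
    return set(EMAIL_PATTERN.findall(page_text))


def build_database(pages: list[str]) -> set[str]:
    """Merge the addresses found on many pages into one de-duplicated set."""
    database: set[str] = set()
    for page in pages:
        database |= harvest_addresses(page)
    return database


SAMPLE_PAGE = """
<p>Contact the club secretary at secretary@example.org
or the webmaster (webmaster@example.net).</p>
"""

print(sorted(build_database([SAMPLE_PAGE, "<p>Orders: sales@example.com</p>"])))
# ['sales@example.com', 'secretary@example.org', 'webmaster@example.net']
```

Because the harvesting step is just text matching, it scales to as many pages as the program can download, which is the asymmetry the post argues about.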

Internet Service Providers (ISPs) use automated programs to look for bursts of email originating from a single IP address. When these programs find an IP address that they suspect of spamming, they can block it. This sounds effective, but spammers are able to change their IP address with the ISP filters none the wiser (Stopping Spam, n.d.). They can then keep sending until the new IP address is blocked, and the process starts again, so IP filtering cannot prevent spam entirely.
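The IP filtering described above can be sketched as a sliding-window counter. This is a hypothetical illustration, not how any particular ISP works: the class name, the thresholds and the documentation-range IP addresses are all assumptions. It also reproduces the weakness in the paragraph, since a fresh IP address starts with a clean record.

```python
from collections import defaultdict, deque


class BlastFilter:
    """Hypothetical ISP-style filter: an IP address that sends more than
    `max_messages` emails within `window_seconds` is blocked. The thresholds
    are illustrative assumptions, not values any real ISP is known to use."""

    def __init__(self, max_messages: int = 100, window_seconds: float = 60.0):
        self.max_messages = max_messages
        self.window_seconds = window_seconds
        self.recent = defaultdict(deque)  # IP -> timestamps of recent messages
        self.blocked: set[str] = set()

    def accept(self, ip: str, timestamp: float) -> bool:
        """Return True if the message is delivered, False if it is blocked."""
        if ip in self.blocked:
            return False
        times = self.recent[ip]
        times.append(timestamp)
        # Forget messages that fall outside the sliding time window.
        while timestamp - times[0] > self.window_seconds:
            times.popleft()
        if len(times) > self.max_messages:
            self.blocked.add(ip)
            return False
        return True


spam_filter = BlastFilter(max_messages=3, window_seconds=60)
# A burst of five messages from one address: the fourth trips the filter.
print([spam_filter.accept("203.0.113.5", t) for t in range(5)])
# [True, True, True, False, False]
# The same spammer switches to a fresh IP address and is delivered again.
print(spam_filter.accept("198.51.100.7", 5))
# True
```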

Word filters scan messages as they arrive in your email program, looking for key words that are blacklisted as typical spam words (Stopping Spam, n.d.). These need not be single words; they can also be phrases such as “check out this free offer!”. When the filter finds a blacklisted word or phrase, the message is blocked and quarantined. The problem is that the filter can also block a legitimate email that happens to contain these words. It is possible to add rules that always let certain senders through, but if you receive an email from someone who has no such rule, you might never read it. This is not ideal, especially in a business setting. Spammers also use tricks to get around word filters, such as spelling words slightly differently so that the filter does not recognise them, so word filters are not 100% effective at preventing spam either.
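A word filter with an allow list might look roughly like the following Python sketch. The blacklist, the sender addresses and the function names are hypothetical; real filters use much larger lists and more sophisticated scoring. The example reproduces both problems from the paragraph: a legitimate message is quarantined, and a misspelled spam phrase slips through.

```python
import re

# Hypothetical blacklist of typical spam words and phrases.
BLACKLIST = ("free offer", "act now", "winner")


def contains_blacklisted(message: str, blacklist: tuple[str, ...] = BLACKLIST) -> bool:
    """True if any blacklisted word or phrase appears as whole words, ignoring case."""
    text = message.lower()
    return any(
        re.search(r"\b" + re.escape(phrase) + r"\b", text) for phrase in blacklist
    )


def should_quarantine(sender: str, message: str, allowed_senders: set[str]) -> bool:
    """Senders with an 'allow' rule always get through; everyone else is scanned."""
    if sender in allowed_senders:
        return False
    return contains_blacklisted(message)


allowed = {"manager@example.com"}
LEGIT_MESSAGE = "Our supplier has made us a free offer of extra stock this quarter."

print(should_quarantine("spammer@example.biz", "Check out this FREE OFFER!", allowed))  # True
print(should_quarantine("newclient@example.com", LEGIT_MESSAGE, allowed))  # True (false positive)
print(should_quarantine("manager@example.com", LEGIT_MESSAGE, allowed))    # False (allow rule)
print(should_quarantine("spammer@example.biz", "Check out this fr3e 0ffer!", allowed))  # False (evaded)
```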

As we can see, automation makes it easy for spammers to continuously scan for new email addresses and build databases quickly. Automated filters, on the other hand, are always struggling to keep up, and spammers keep finding ways to get around them. Therefore automation facilitates spam more than it prevents it.

Site 1:

Mullane, G. S. (n.d.). Spambot Beware. Retrieved January 25, 2009, from Turn Step: http://www.turnstep.com/Spambot/harassment.html

This site contains useful information on how to ‘harass’ Spambots as they attempt to scan your site. By ‘harass’ I mean making it hard, if not impossible, for the Spambot to do its job. There are automated processes that attempt to trap and break Spambots by overloading them, although they are not always reliable and can be hard on your own system resources or software. The word limit prevented me from discussing these automated processes, but nonetheless they also show why automation is not as effective at dealing with spam as it is at enabling it.

Site 2:

Claburn, T. (2005, February 5). Spam Costs Billions. Retrieved January 25, 2009, from Information Week: http://www.informationweek.com/news/security/vulnerabilities/showArticle.jhtml?articleID=59300834

Another thing I would have liked to go into, but couldn’t because of the word count, was how automation’s inability to prevent spam is costing businesses billions of dollars every year, which makes it cost-inefficient in this sense. This money is not directly a profit to spammers, but in effect businesses are paying for spammers to spam. This is extremely relevant, because automation is the only weapon we have to fight with. As long as spammers have the upper hand with automation, spam will continue to cost us severely.

Bibliography

Microsoft Exchange Definitions - Spambot. (2005, March 21). Retrieved January 25, 2009, from SearchExchange.com: http://searchexchange.techtarget.com/sDefinition/0,,sid43_gci896167,00.html

Stopping Spam. (n.d.). Retrieved January 25, 2009, from How Stuff Works: http://computer.howstuffworks.com/spam3.htm


Concepts Assignment - 3 - Meta Data

11 - The relationship of data to meta-data

A great failing of most web browser and management software is its inability to allow people to easily organize and reorganise information, to catalogue and sort it, thereby attaching their own metadata to it. Without the physical ability to sort, annotate, sort and resort, it is harder to do the cognitive processing necessary to make the data ‘one’s own’, relevant to the tasks that you are using it for, rather than its initially intended uses. New forms of ‘organisation’ need to be found, and new software to make it work. (Allen, n.d.)

I’ve chosen this concept because I think it is becoming outdated. There are now opportunities to organise, reorganise, catalogue, share and sort our online information, particularly bookmarks. Online applications now allow users to add Meta Data to their bookmarks, and we will see that this is becoming an extremely powerful tool in everyday web browsing.

Sometimes it can take a long time to find the information you are looking for on the net. Search engines can help, but you often have to filter through a lot of irrelevant sites before you come across quality information. Fortunately, when we finally find these quality sites we can bookmark them, allowing us to return whenever we want without having to search for the site again. Can you imagine all the bookmarks saved on computers all over the world? These bookmarks were saved by real human beings who can judge the quality of a document, unlike a search engine algorithm, which relies largely on the words that a document contains. Imagine if there was a way to search other people’s quality bookmarks in order to find the best information possible. Well, now you can. This is made possible by a new kind of online application called Social Bookmarking (Arundel, 2005).

Social Bookmarking is an online application that allows users to store, organise and share bookmarks (Learn More about Delicious, n.d.). Instead of storing bookmarks in our web browser, we store them in our online profile on a Social Bookmarking website. For the sake of this argument we’ll talk about Delicious, one of the more popular Social Bookmarking sites. Delicious saves our bookmarks on a centralised server, meaning that we can retrieve them from any computer connected to the Internet. The most powerful feature of Delicious, however, is the ability to add Meta Data to our bookmarks. We can tag our bookmarks with keywords in order to classify them. So if you found a site with a good recipe, you could tag it with the keywords ‘pork’ and ‘casserole’. When you want to find it again, you just search for those words to retrieve it. Because Delicious is a social network, we can also make our bookmarks public, which means anybody can see them. So if someone in another country searches for pages with the Meta Data ‘pork casserole’, your bookmark will come up in a ranked list. The ranking is determined by the number of Delicious users who have saved a particular bookmark.
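To show how tags can act as Meta Data for search, here is a minimal Python sketch of public tag search ranked by the number of users who saved each page. The `Bookmark` record, the `search_public` function and the example URLs are hypothetical; this is not Delicious’s actual data model or API.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Bookmark:
    """Hypothetical record of one user's saved page (tags stored in lowercase)."""
    user: str
    url: str
    tags: frozenset[str]
    public: bool = True


def search_public(bookmarks: list[Bookmark], query_tags: list[str]) -> list[str]:
    """Return URLs publicly tagged with every query tag, most-saved first."""
    wanted = {tag.lower() for tag in query_tags}
    matches = {b.url for b in bookmarks if b.public and wanted <= b.tags}
    savers: dict[str, set[str]] = defaultdict(set)  # URL -> users who saved it publicly
    for b in bookmarks:
        if b.public and b.url in matches:
            savers[b.url].add(b.user)
    # Rank by number of distinct savers; break ties alphabetically.
    return sorted(matches, key=lambda url: (-len(savers[url]), url))


bookmarks = [
    Bookmark("chris", "http://recipes.example.com/pork-casserole", frozenset({"pork", "casserole"})),
    Bookmark("ana", "http://recipes.example.com/pork-casserole", frozenset({"pork", "casserole", "dinner"})),
    Bookmark("li", "http://food.example.org/slow-cooked-pork", frozenset({"pork", "casserole"})),
    Bookmark("sam", "http://food.example.org/beef-stew", frozenset({"beef", "stew"})),
]
print(search_public(bookmarks, ["pork", "casserole"]))
# ['http://recipes.example.com/pork-casserole', 'http://food.example.org/slow-cooked-pork']
```

The recipe saved by two people outranks the one saved by one person, which is the popularity-based ranking the paragraph describes.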

Another useful feature comes into play when you are researching an assignment. With Delicious you could tag each relevant page you find with the words ‘Science’ and ‘Assignment’ and make those bookmarks private, which means other users cannot see them. Searching for those tags then brings up all the relevant information you have found. Making the bookmarks private also keeps them out of other people’s results, where they may not be relevant to someone else’s search for ‘science assignment’.
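Private bookmarks can be sketched as a visibility check applied at search time: a viewer sees public bookmarks plus their own private ones. The `Bookmark` record, the `search_as` function and the URLs below are hypothetical illustrations, not how Delicious actually implements privacy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bookmark:
    """Hypothetical bookmark record (tags stored in lowercase)."""
    user: str
    url: str
    tags: frozenset[str]
    public: bool = True


def search_as(viewer: str, bookmarks: list[Bookmark], query_tags: list[str]) -> list[str]:
    """Search as `viewer`: public bookmarks plus the viewer's own private ones."""
    wanted = {tag.lower() for tag in query_tags}
    return sorted({
        b.url
        for b in bookmarks
        if wanted <= b.tags and (b.public or b.user == viewer)
    })


ASSIGNMENT = frozenset({"science", "assignment"})
bookmarks = [
    Bookmark("chris", "http://library.example.edu/photosynthesis", ASSIGNMENT, public=False),
    Bookmark("chris", "http://journal.example.org/cell-biology", ASSIGNMENT, public=False),
    Bookmark("ana", "http://physics.example.net/forces", ASSIGNMENT),
]

print(search_as("chris", bookmarks, ["Science", "Assignment"]))
# ['http://journal.example.org/cell-biology', 'http://library.example.edu/photosynthesis', 'http://physics.example.net/forces']
print(search_as("ana", bookmarks, ["science", "assignment"]))
# ['http://physics.example.net/forces']
```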

Meta Data and Social Bookmarking give us the opportunity to organise our bookmarks as well as to search other people’s, which may be more relevant than what a search engine presents. Therefore user-generated Meta Data is becoming a powerful tool in everyday web browsing.

Site 1:

Bartholme, J. (2007, April 8). Benefits of Being Saved on Delicious. Retrieved February 1, 2009, from Jason Bartholme's Blog: http://www.jasonbartholme.com/benefits-of-being-saved-on-delicious/

Jason’s post talks about the advantages of getting your page saved on Delicious. Once Meta Data is attached to your page in Delicious, people can search for it much as they would in a search engine, which draws visitors to your site. I think that this whole concept of user-generated search tags will become more important in the future. While I’m not saying it will rival Google, it will make search results more relevant, because real people are tagging pages with relevant keywords.

Site 2:

7 Things You Should Know About Social Bookmarking. (2005, May). Retrieved February 1, 2009, from Educause: http://educause.edu/ir/library/pdf/ELI7001.pdf

This downloadable PDF is a great introduction to Social Bookmarking. It plainly states what Social Bookmarking does, along with its advantages and disadvantages. It makes a good point in criticising the fact that a lot of bookmarking is done by amateurs. One outcome of this is Meta Data that is irrelevant or insufficient to categorise a particular source, which lowers the relevance of searches. However, it also explains the potential that social bookmarking and user-generated Meta Data have for saving us time when trying to find relevant data.

Bibliography

Arundel, R. (2005). Social Bookmarking Tools for Collaberation and Interaction. Retrieved February 1, 2009, from Speaking and Marketing Tips: http://www.speakingandmarketingtips.com/social-bookmarking.html

Learn More about Delicious. (n.d.). Retrieved February 1, 2009, from Delicious: http://delicious.com/help/learn


Concepts Assignment - 2 - Frames

Concept 30 - Frames: the information-display challenge

“Websites can be created in many ways, using a variety of display techniques. One well-used, but also widely criticised approach is to use frames. While it is useful to understand frames technically (so as to allow users easy printing, navigating, saving and searching), it is also important to see them as an example of an underlying conceptual struggle between information and display.” (Allen, n.d.)

Frames are useful for loading dynamic content, but for elements that stay in a fixed position on screen and display static content (the focus of this paper), there is little, if any, need for them. A major downfall of frames is that they undermine Search Engine Optimisation (SEO), which is extremely desirable in order to attract visitors to your page rather than a rival’s. As we will see, CSS provides an alternative method to frames that facilitates good SEO principles.

The element we will use to demonstrate where CSS can replace frames is the fixed-position navigation menu. Navigation menus act as the gateway for web crawlers (the agents sent out by search engines to index the web) to go deeper into your site structure in order to index it. What some web developers may not realise, however, is that frames hamper a web crawler’s ability to do this, and as a result, sites that use frames may not be indexed correctly.

Web crawlers use hyperlinks (HREFs, the HTML attribute that links your pages together) to traverse a website (Aharonovich, 2006). However, because frames display content loaded from separate documents, many web crawlers cannot see the HREFs contained in that content, and are therefore unable to access and index the deeper layers of the site. From an SEO perspective, this is not good. Some web crawlers are able to access a frame’s content through its SRC attribute (the reference to the separately loaded document), but this poses new problems (Search Engine Marketing FAQ, n.d.). When the web crawler indexes that page, it indexes it as an independent entity, outside of the main page structure. That means that when users click on it from a search engine, the page is displayed by itself, out of context, instead of inside its containing frame on the main website. This is not desirable, as your page will not be displayed in the way it was intended. Thus, frames are a major concern when trying to maximise the SEO potential of a website.
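To make the crawler argument concrete, here is a minimal sketch of the link-gathering step, assuming a simple crawler that parses one HTML document at a time with Python’s standard `html.parser` module. The page contents, file names and the `LinkCollector`/`collect_links` names are hypothetical. It shows that a frameset page exposes no `<a href>` links at all: the menu links live in the separate `menu.html` document, reachable only through a frame’s `src` attribute.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collects what a simple crawler could follow from one HTML document:
    <a href> links, and separately any <frame>/<iframe> src references."""

    def __init__(self):
        super().__init__()
        self.hrefs: list[str] = []
        self.frame_srcs: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a" and attributes.get("href"):
            self.hrefs.append(attributes["href"])
        elif tag in ("frame", "iframe") and attributes.get("src"):
            self.frame_srcs.append(attributes["src"])


def collect_links(html: str) -> tuple[list[str], list[str]]:
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.hrefs, collector.frame_srcs


# Hypothetical frameset page: the navigation lives in a separate file.
FRAMESET_PAGE = """
<html>
  <frameset cols="200,*">
    <frame src="menu.html" name="nav">
    <frame src="home.html" name="content">
  </frameset>
</html>
"""
# Hypothetical contents of menu.html, a document of its own.
MENU_PAGE = '<ul><li><a href="index.html">Home</a></li><li><a href="products.html">Products</a></li></ul>'

print(collect_links(FRAMESET_PAGE))  # ([], ['menu.html', 'home.html'])
print(collect_links(MENU_PAGE))      # (['index.html', 'products.html'], [])
```

A crawler that does follow `src` would fetch `menu.html` and `home.html` as standalone documents, which is how a framed page ends up indexed and displayed out of context.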

CSS can be used instead of frames to position elements within a webpage. It does this with the property declaration ‘position: fixed’, which keeps an HTML element in a fixed position relative to the browser window, so it stays in place while the rest of the page scrolls. But unlike frames, it can do this without limiting the SEO potential of a website.

A DIV is one HTML element that can be positioned with ‘position: fixed’. The difference between a DIV and a frame is that the DIV’s content is already embedded within the main document and doesn’t have to be loaded separately. So when a web crawler comes to your newly designed website, it can see your navigation menu embedded within a DIV (or similar HTML element) and can therefore follow the hyperlinks it contains.
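For comparison, here is a minimal sketch of the CSS approach, again assuming a simple crawler built on Python’s standard `html.parser`: a hypothetical page whose menu sits in a DIV styled with `position: fixed`, parsed by an illustrative `HrefCollector`. Because the menu is part of the main document, its links are found on the first pass without fetching any other file.

```python
from html.parser import HTMLParser


class HrefCollector(HTMLParser):
    """Records the href of every <a> tag, as a simple crawler might (illustrative)."""

    def __init__(self):
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        href = dict(attrs).get("href")
        if tag == "a" and href:
            self.hrefs.append(href)


def crawlable_links(html: str) -> list[str]:
    collector = HrefCollector()
    collector.feed(html)
    collector.close()
    return collector.hrefs


# Hypothetical page: the menu is fixed on screen by CSS but embedded in the document.
CSS_PAGE = """
<html>
  <head>
    <style>
      /* The menu stays put while the content scrolls, much like a nav frame. */
      #menu { position: fixed; top: 0; left: 0; width: 200px; }
      #content { margin-left: 220px; }
    </style>
  </head>
  <body>
    <div id="menu">
      <a href="index.html">Home</a>
      <a href="products.html">Products</a>
      <a href="contact.html">Contact</a>
    </div>
    <div id="content"><p>Page content scrolls here.</p></div>
  </body>
</html>
"""

print(crawlable_links(CSS_PAGE))
# ['index.html', 'products.html', 'contact.html']
```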

After seeing the Search Engine Optimisation disadvantages of frames, it should be clear that frames are not a good option for important fixed-position elements such as menus. CSS keeps the full potential of Search Engine Optimisation while still allowing elements to be fixed in place on screen. Therefore CSS provides an alternative method to frames that facilitates good Search Engine Optimisation principles.

Site 1:

Why frames and search engine optimization don’t mix. (n.d.). Retrieved January 25, 2009, from Pandia: http://www.pandia.com/sew/500-frames.html

For further investigation into why frames are bad to use, pandia.com clearly shows how frames can destroy the external linking capabilities of a site through a sort of case study of an online store. In an online store, external linking is an absolute must in order to drive sales from different referrals. The case study shows framesets in context: as you click through the shop, the URL doesn’t change for each item, which eliminates any possibility of linking external buyers to a particular product. This is a good case of a store struggling between information (its products) and display (the store’s presentation).

Site 2:

Kyrnin, J. (n.d.). What is Ajax? Retrieved January 21, 2009, from About.com: http://webdesign.about.com/od/ajax/a/aa101705.htm

I’m including this website because even though CSS can replace frames for fixing elements in place, it can’t replace all the functionality that frames offer. The ability to load content dynamically is probably a frameset’s biggest strength. Dynamic loading of content is very important in Web 2.0, and this site explains Ajax, a way in which this can be achieved without frames while still maintaining SEO principles. People who want to maximise SEO while still having the benefits of dynamically loading content should investigate this site.

Bibliography

Aharonovich, E. (2006, November 28). How Web Crawlers Work. Retrieved January 25, 2009, from The Code Project: http://www.codeproject.com/KB/tips/Web_Crawler.aspx

Search Engine Marketing FAQ. (n.d.). Retrieved January 25, 2009, from SEO Logic: http://www.seologic.com/faq/frames-html-links.php


Concepts Assignment - 1 - Information and Attention

33. Information and attention

In this kind of economy, the most valuable commodity is people’s attention (which can be, for example, bought and sold in the advertising industry): successful websites and other Internet publications / communications (says Goldhaber) are those which capture and hold the increasingly distracted attention of Internet users amidst a swirling mass of informational options. (Allen, n.d.)

As I investigated this concept further, I read Goldhaber’s paper ‘The Attention Economy and The Net’. He introduces some ideas that few people are aware of, namely how we make transactions with our inherent wealth of attention. His text illustrates the importance of understanding the new attention economy, which, as we will see, will only become more important to understand in the future.

Every day we make transactions without even reaching for our wallet. When, for example, a TV ad captures our interest, someone comes up and talks to us, or we are driving our car, we are paying for each with our attention. In order to focus enough mental energy on each task (watching a TV ad, talking with someone or driving a car) we need to block out other things so that we can use our brain to do it properly. Can you imagine trying to drive a car while watching a TV ad and having a conversation? If we split our attention between all three at once, we would be short-changing the amount required to drive safely, which is obviously not a good thing. In the real world there are many different things we can spend our attention on, but on the Internet there is only one thing we can spend it on, and that is information.

The internet is so full of information that we have to navigate carefully in order not to waste our attention. Of course it takes some attention to navigate, but skilled internet users know how to search for information without wasting precious time and attention. However, when we are faced with serial distractions like chat, Facebook and games, and then of course the more evil attention grabbers like spam and popup windows, we can see how easy it is to waste our attention while using the internet. Marketers have to think much more cleverly in order to capture the scarce attention of internet users, which is a hard task when they are competing with all the other information.

Because the human race is becoming more and more reliant on, and integrated with, technology, we are going to have to spend more and more of our attention on processing information. If we want to be able to navigate and filter out irrelevant information, then we are going to have to become good at saving as much attention as we can until we find what we are looking for. Technology will become better at helping us do this, but at the end of the day, regardless of any technological achievement, we are always going to have only a limited wealth of attention to spend. With all the people out there who are after it, you are going to need to use it wisely.

Site 1:

Goldhaber, M. H. (1997, April 7). The Attention Economy and The Net. Retrieved January 28, 2009, from First Monday: http://firstmonday.org/htbin/cgiwrap/bin/ojs/index.php/fm/article/view/519/440

This site is perfect for the beginner who doesn’t understand what the attention economy is. After I read the concept, this article cleared up my misunderstandings and allowed me to build an argument. I think, though, that he tends to exaggerate how much the material economy will shrink. For example, we will never be able to be nourished by education, so there will always be production to keep the material economy alive. What he is getting at is that the material economy will most probably shrink down to the bare essentials that production must supply to the human race.

Site 2:

Too Much Information - Take back your attention span. (2007, September 25). Retrieved January 28, 2009, from Download Squad: http://www.downloadsquad.com/2007/09/25/too-much-information-take-back-your-attention-span/

This site proposes some tactics for eliminating TMI, or Too Much Information. Going forward, internet users are going to have to come up with, or learn, as many tactics as they can in order to block out unwanted information. Sure, we have started doing this with the advent of spam filters and popup blockers, but there are other streams of information we can look at. For example, the site goes on to look at getting rid of physical-world information such as paper bills. It is a good direction to take if we want to streamline our information processing in the future.

Wednesday, January 21, 2009

MODULE FOUR - Flash

Flash is one of the most exciting programs on the net when it is in the hands of a really good designer. Everyone has seen those sh**ty 'shoot the monkey to win an iPhone' advertisements, and that is not what I'm talking about. I'm talking about turning a web page into something more dynamic than just articles and text.

For example, I've recently noticed a lot of advertisement popups on news.com.au. Normally I'd object to these obtrusive popups, but lately there has been a lot of innovation that made me take notice. I noticed an Intel ad recently that seemed to be just a little box among the sea of words and pictures. Then all of a sudden the letters in the Flash ad exploded and went flying right across the page, to my amazement. This is made possible by JavaScript manipulating DIVs to make the Flash area bigger for a moment. Because the DIV is transparent, as is the background of the Flash movie, it gives the impression that the Flash advertisement broke out of its containment, when in fact it momentarily took control of the whole browser area.

I also like it when I find a movie trailer advertisement on a webpage... on one condition... I want to be the one who initiates the download and playing of the trailer. Lately a lot of trailers just play themselves and you only have the choice of turning the sound on. Now if god bestows his wrath upon the world once more and I have to see Paris Hilton's skull on the TV spots, I don't want to go to news.com.au and have my bandwidth and CPU power wasted on her mediocre (is that too generous?) acting skills. Is there not a rule or something that web designers need to ethically follow, where you don't force something upon somebody in a web page?

Anyway, Flash is cool and it's here to stay, and as speeds and processing power get better, I think it's going to develop into an extremely powerful programming platform.

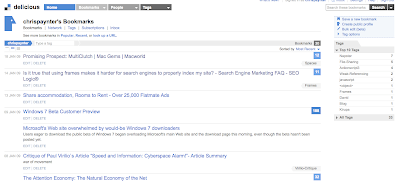

MODULE FOUR - Bookmark Manager

I'm personally not a big fan of storing information like bookmarks on my computer. The reason is that when I format my computer, 9 times out of 10 I'll forget to save my bookmarks. That's what got me onto using delicious. It's a web-based bookmark manager with many benefits beyond what your normal browser gives you.

For starters, delicious is a social network. Not in the same sense as Facebook; rather, it is focused on sharing bookmarks. The strength of this social network is the ability to see how bookmarks rank. The more users that have bookmarked a particular URL, the more likely it is that the URL contains valid and relevant information.

You're able to search for bookmarks in delicious just like pages in Google. Delicious works by searching through the tags that users give their bookmarks. For example, if I bookmarked a page on the Joe Satriani Ibanez guitar, I would give it the tags 'Satriani', 'Ibanez' and 'Guitar'. I left 'joe' out because it is quite an arbitrary tag that wouldn't give as relevant a result as 'Satriani' would. In fact, the whole searching capability of delicious relies on each tag's relevance to the bookmark it is referencing.
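The idea of searching by tags and ranking by how many people saved a page can be sketched in a few lines. This is a toy model under my own assumptions, not how delicious is actually implemented: the bookmark data, URLs, and the `build_index` and `search` helpers are hypothetical, and "popularity" is simply the number of distinct users who bookmarked a URL.

```python
from collections import defaultdict

# Hypothetical bookmarks: (user, url, tags). Invented purely for illustration.
BOOKMARKS = [
    ("chris", "http://example.com/satriani-ibanez", {"satriani", "ibanez", "guitar"}),
    ("anna", "http://example.com/satriani-ibanez", {"satriani", "guitar"}),
    ("ben", "http://example.com/satriani-ibanez", {"ibanez", "jem"}),
    ("anna", "http://example.com/guitar-lessons", {"guitar", "lessons"}),
]


def build_index(bookmarks):
    """Build tag -> URLs and URL -> users who saved it."""
    tag_index = defaultdict(set)  # tag -> set of URLs carrying that tag
    savers = defaultdict(set)     # url -> set of users who bookmarked it
    for user, url, tags in bookmarks:
        savers[url].add(user)
        for tag in tags:
            tag_index[tag.lower()].add(url)
    return tag_index, savers


def search(tag_index, savers, *query_tags):
    """Return URLs tagged with every query tag, most-bookmarked first."""
    if not query_tags:
        return []
    # Simplification: the tags may have been applied by different users.
    matches = set.intersection(*(tag_index.get(t.lower(), set()) for t in query_tags))
    # Rank by number of distinct users who saved the URL; break ties alphabetically.
    return sorted(matches, key=lambda url: (-len(savers[url]), url))


tag_index, savers = build_index(BOOKMARKS)
print(search(tag_index, savers, "guitar"))
# ['http://example.com/satriani-ibanez', 'http://example.com/guitar-lessons']
```

Searching for 'joe' in this sketch returns nothing, since nobody used that tag, which mirrors why a specific tag like 'Satriani' is more useful than an arbitrary one.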

The other bonus of delicious is that you can access your bookmarks from any computer in the world. All you do is go to the delicious site and log in and all your bookmarks are displayed in your profile, where you can search through your tags or create notes to remind you why you bookmarked a particular page.

The future is cloud computing, and I really feel that the more information I can unchain from my computer (as long as it is secured) the better!

If you'd like to jump on the delicious bandwagon, head on over to http://delicious.com/

There's also an extension for Firefox that makes using delicious as easy as one could want.
